The Unshredder

So this is my solution to the Instagram Engineering Challenge: The Unshredder I submitted via email last Tuesday.

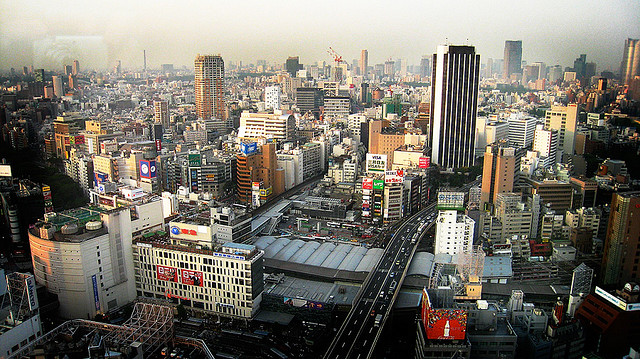

It was a lot of fun because it’s a great problem, gave me a chance to try out Python for the first time, and allowed me to whet my appetite for image analysis. For those not familiar, it was a challenge to write a script that reconstructs a shredded photo of the Tokyo skyline.

The algorithmic solution should work for most other shredded images, but it’s not meant to be perfect. With pattern detection and edge matching you can always get more complex and comprehensive. Here were their guidelines.

TIPS

1) Don’t overthink it. Use of really complex algorithms isn’t needed. Our solution WITH the bonus exercise comes in at just over 150 lines of python.

2) Think about how you would quantify whether or not two shreds ‘fit’ together by using pixel data

3) Assume you’re using the source image, or other normal photographs without edge-case patterns.

4) There are edge cases where the script we wrote with our approach will not work because of repeating patterns. This is OK in your script as well. Don’t worry about special cases – focus on making the sample images work that we’ve provided.

5) Bonus Challenge: If you decide you want to auto-detect how many columns there are in an image, you should remember that there are a finite amount of columns that are possible given an image of a certain width if you assume columns are evenly distributed and uniformly sized.

Basically, I took this to mean to find the simplest solution I could, trying to come up with something under 150 lines.

The funny thing is that I spent more time than I wanted to just getting PIL installed on my MacBook. I finally compiled jpeg-8c and Imaging-1.1.7 from source using ARCHFLAGS=”-arch i386 -arch x86_64″ and all was good.

After that, I perused the PIL documentation and first thought I might try the histogram method in the Image module, but I couldn’t quickly find any useful patterns for edge matching. So, I moved on and saw the difference method in the ImageChops module. That would be my key to a simple and effective-enough solution.

In short, I used the difference method to calculate the absolute difference between a one pixel wide edge of a shred and the matching/mismatching one pixel wide edge of another shred. In theory, the absolute difference of the matching edges should produce a close-to-black one pixel wide image. I reduced that one pixel wide image down to a value that could be compared by calculating the average distance from pure black for all the pixels.

I started building the unshredded image with the first shred, then compared all the shreds to the edges of that developing image, adding shreds to the left and right until the image was complete.

I used that same method to auto-detect the column width. I just had to establish a threshold for how abruptly the average distance from pure black would change between shreds.

Here’s my unshredded image.

I’d like to explore more complex algorithms for line detection and edge matching. I did start to investigate the Hough transform for line detection. I thought maybe simply calculating the derivative to identify the rate of change in color could be enough to figure out where the shred edges are and whether there is a match when trying to arrange them.

I may write a new version of my script to utilize something that works a greater percentage of the time, but for now I’m satisfied with my 106 lines of Python.